Our CI/CD Journey -- from Monolith to Micro-Services

**📘 In collaboration with our Lead DevOps, Zackky Muhammad**

Migrating from a monolith to micro-services is not just about how we analyse and code it, but also about how we deploy it. It has been 2 years since I joined Kitabisa, and we are still on a big adventure with this. It’s complex, there’s a lot of work to do, and yet it’s exciting! This is our journey so far.

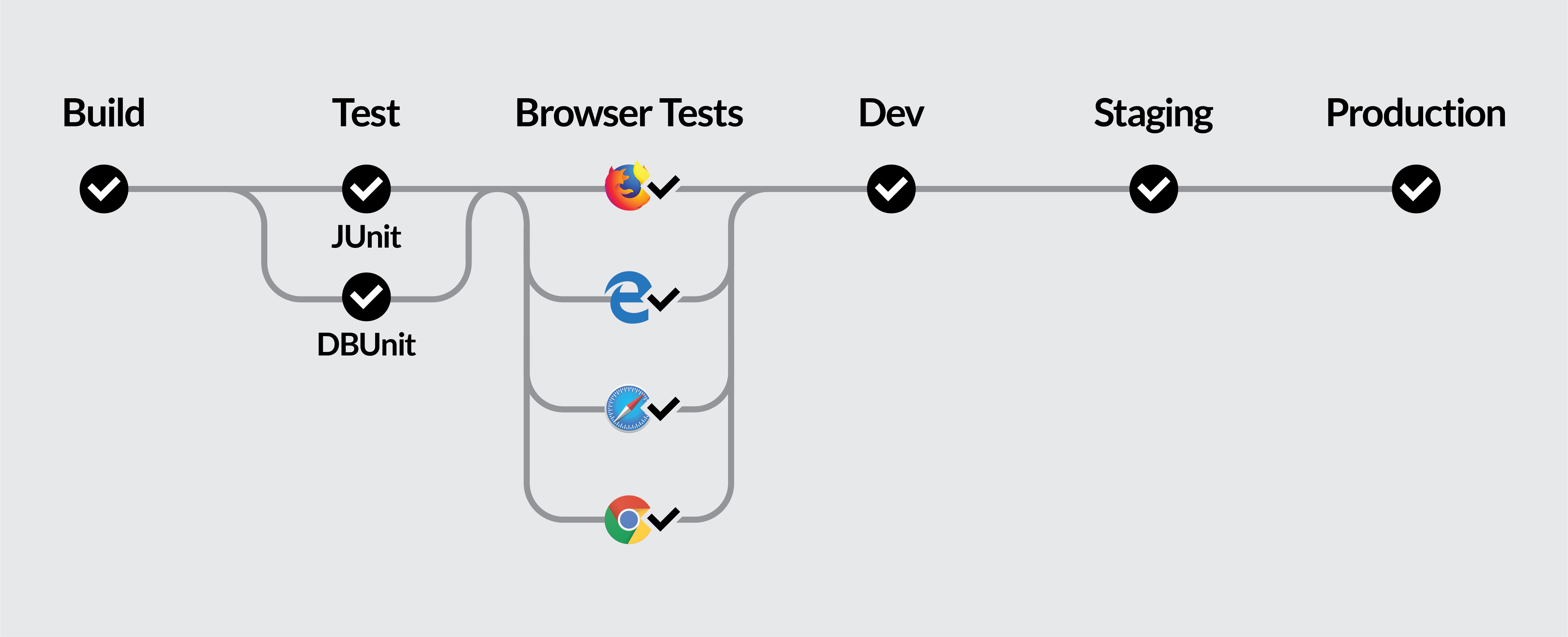

source: https://www.linode.com/docs/development/ci/introduction-ci-cd/

P.S.: The image above is not our current CI/CD pipeline; it’s just an example 😁

Laravel Forge

In the monolith era, since our app was built with PHP, we used Laravel Forge to handle deployment. The deployment script split our monolith into 4 functions: the main user-facing product kitabisa.com, the API used by our mobile app, the cron jobs, and the internal tools. All of them were deployed whenever a push happened on our master branch. One manual step still had to be done beforehand, though: editing the config file when needed. The pseudo code looked roughly like this.

1. git pull origin master

2. copy the index & config files from a private bucket

3. composer install

4. restart PHP-FPM (we use OPcache, and restarting clears its cache of compiled scripts)
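For readers who haven’t used Forge, the four steps above could be written roughly as follows. This is a minimal Python sketch, not our actual Forge script (Forge deploy scripts are plain shell). The app directory, bucket name, file names and PHP-FPM service name are all hypothetical, and we assume an S3-compatible private bucket read with the AWS CLI. By default it only prints the commands.

```python
import shlex
import subprocess

# Hypothetical values: the real paths, bucket and PHP version are not part of this post.
APP_DIR = "/home/forge/kitabisa.com"
CONFIG_BUCKET = "s3://example-private-bucket/monolith"
PHP_FPM_SERVICE = "php7.2-fpm"


def deploy_plan(app_dir: str = APP_DIR) -> list:
    """Return the deploy steps as argv lists, in the order they must run."""
    return [
        # 1. Pull the latest code from master.
        ["git", "-C", app_dir, "pull", "origin", "master"],
        # 2. Copy the index and config files that are kept out of the repository.
        ["aws", "s3", "cp", f"{CONFIG_BUCKET}/index.php", f"{app_dir}/public/index.php"],
        ["aws", "s3", "cp", f"{CONFIG_BUCKET}/config.php", f"{app_dir}/config.php"],
        # 3. Install PHP dependencies.
        ["composer", "install", f"--working-dir={app_dir}"],
        # 4. Restart PHP-FPM so OPcache discards the previously compiled scripts.
        ["sudo", "service", PHP_FPM_SERVICE, "restart"],
    ]


def run(plan: list, dry_run: bool = True) -> None:
    """Print each step; execute it only when dry_run is False."""
    for argv in plan:
        print("+", shlex.join(argv))
        if not dry_run:
            # check=True aborts the deploy at the first failing step.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run(deploy_plan())
```

Keeping the steps as argv lists (instead of one shell string) means a failing step stops the deploy before PHP-FPM is restarted on half-updated code.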

The Legacy Story

At first we used only one server to serve our app (well, that’s obvious), and we scaled only vertically. Our app was stateful. At that time, on Digital Ocean, once we scaled up we couldn’t scale back down, and since Kitabisa’s traffic is sometimes unpredictable, especially when big things happen (e.g. the Palu earthquake in 2018), the extra resources sat unused once the event was over. Traffic went back to normal, but the cost didn’t.

We moved to multiple servers, moved the state into Redis so that our app became stateless, and added a load balancer. Scaling was still manual, and spinning up a new server was slow, so we were usually late to handle sudden traffic spikes. Digital Ocean is cheap, but it wasn’t scalable enough for us.
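Why moving the state into Redis matters once a load balancer is involved can be shown with a toy model. In this Python sketch a plain dict stands in for Redis and a round-robin picker stands in for the load balancer; both are simplifications, and the class names are hypothetical. A session created on one server is only visible to the next server when the store is shared.

```python
import itertools
import uuid


class AppServer:
    """A web server that keeps no session data of its own."""

    def __init__(self, name: str, session_store: dict):
        self.name = name
        self.sessions = session_store  # stands in for Redis

    def login(self, user: str) -> str:
        session_id = uuid.uuid4().hex
        self.sessions[session_id] = {"user": user}
        return session_id

    def current_user(self, session_id: str):
        session = self.sessions.get(session_id)
        return session["user"] if session else None


class RoundRobinBalancer:
    """Sends each request to the next server in turn."""

    def __init__(self, servers):
        self._servers = itertools.cycle(servers)

    def pick(self) -> AppServer:
        return next(self._servers)


# Shared store: the second request hits app-2 but still finds the session.
shared = {}
lb = RoundRobinBalancer([AppServer("app-1", shared), AppServer("app-2", shared)])
sid = lb.pick().login("donor@example.com")
print(lb.pick().current_user(sid))  # donor@example.com

# Per-server stores (stateful): app-2 has never seen the session.
lb = RoundRobinBalancer([AppServer("app-1", {}), AppServer("app-2", {})])
sid = lb.pick().login("donor@example.com")
print(lb.pick().current_user(sid))  # None
```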

Jenkins

We started building our first micro-service, beginning with the payment service. Since it is built with Go, our infra team decided to try a deployment pipeline with Jenkins. We also started using AWS instead of our existing Digital Ocean setup. We chose AWS ECS this time, since this was our first service and we wanted to containerise it. We used a staging branch strategy here, so everything pushed to the staging branch was automatically built and deployed to our staging environment.

The build runs go dep / glide to fetch dependencies (Go modules hadn’t been officially released yet), runs go build, then packages the result into a Docker image. It then pushes the image to ECR (Elastic Container Registry) and updates the Task Definition to deploy the image.

Task Definition: an AWS ECS concept; it works like a config file for a deployment, describing which image to run and with how much CPU and memory.
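Updating the Task Definition boils down to taking the current definition, swapping the container’s image for the one just pushed to ECR, and registering the result as a new revision. The Python sketch below covers only the swap, on a plain dict; the account ID, region, repository, family and container names are hypothetical. In a real pipeline the result would be passed to the ECS RegisterTaskDefinition API (for example boto3’s `register_task_definition`), and the service pointed at the new revision with `update_service`.

```python
import copy


def ecr_image_uri(account_id: str, region: str, repo: str, tag: str) -> str:
    """Build an ECR image reference: <account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repo}:{tag}"


def with_new_image(task_def: dict, container_name: str, image: str) -> dict:
    """Return a copy of the task definition with one container's image replaced."""
    new_def = copy.deepcopy(task_def)  # leave the current revision untouched
    for container in new_def["containerDefinitions"]:
        if container["name"] == container_name:
            container["image"] = image
            return new_def
    raise KeyError(f"no container named {container_name!r}")


# Hypothetical current definition of the payment service.
current = {
    "family": "payment-service",
    "containerDefinitions": [
        {"name": "payment", "image": "payment:old", "cpu": 256, "memory": 512, "essential": True},
    ],
}
image = ecr_image_uri("123456789012", "ap-southeast-1", "payment-service", "a1b2c3d")
print(with_new_image(current, "payment", image)["containerDefinitions"][0]["image"])
# -> 123456789012.dkr.ecr.ap-southeast-1.amazonaws.com/payment-service:a1b2c3d
```

Tagging images with something unique per build (such as the commit SHA used above) makes every new revision point at an exact image, which also makes rollbacks a matter of re-deploying the previous revision.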

For production, a human still had to click the “Build” and “Run” actions to deploy after the code was pushed to master. After all, we wanted to avoid the risk of human error reaching production automatically just by merging a PR. When the second Go-based service came along, Jenkins was still our solution. This service also came with a change in how we handle database migrations, but we will tell you about that later.

CircleCI and GKE

Our services started to grow gradually, and Jenkins was no longer an option: we had to maintain it ourselves, and we wanted to keep a lean team (just 2 strong men for DevOps!). We may also not have followed best practices when we started our CI/CD with it, so it became “dirty”. So we needed a managed service, and we chose CircleCI. We were introduced to Kubernetes (K8s) by Gojek and found out that K8s is not only for big applications, so here comes the Kubernetes era at Kitabisa.

We also use Helm to make life easier. Well, who isn’t using Helm with Kubernetes these days? Have you ever used brew, apt, npm or chocolatey on macOS, Linux or Windows? Helm is quite similar for K8s, with more features than just package management.

In CircleCI everything runs inside containers, so the host stays “clean”. These are the steps we took to prepare our CI/CD on CircleCI; they are probably similar for any managed CI/CD service.

- Create CircleCI orbs: reusable packages of jobs, where each job is a collection of steps that CircleCI runs in the pipeline, e.g. build the code, test it, create the image, etc.

- Connect CircleCI to our GitHub, so that CircleCI can watch for the events that trigger the jobs.

- Connect CircleCI to our infra on GCP through the gcloud CLI. This also lets us get our K8s cluster’s context.

- Set up CircleCI contexts to separate our environments: development, staging, UAT and production.

- Create templates (packaged as charts) with Helm, so that the common things every service needs to install are simplified. Helm renders the templates into manifests, then applies them to K8s.
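The last step, Helm turning templates plus a set of values into manifests, can be illustrated without Helm itself. Real charts are written as Go templates; the Python sketch below is only an analogy that builds the same kind of Deployment and Service objects from a values dict. The value keys (`name`, `image`, `replicas`, `cpu`, `memory`, `published`, `port`) are hypothetical, and mapping “published” to a `LoadBalancer` Service is our assumption; a real chart might use an Ingress instead.

```python
def render_manifests(values: dict) -> list:
    """Build a Deployment and a Service for one service from its values."""
    name = values["name"]
    port = values.get("port", 8080)  # hypothetical default container port
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            "replicas": values.get("replicas", 1),
            "selector": {"matchLabels": {"app": name}},
            "template": {
                "metadata": {"labels": {"app": name}},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": values["image"],
                            "ports": [{"containerPort": port}],
                            "resources": {
                                "requests": {"cpu": values["cpu"], "memory": values["memory"]},
                            },
                        }
                    ]
                },
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name, "labels": {"app": name}},
        "spec": {
            # Published: reachable from outside the cluster. Internal: cluster-only.
            "type": "LoadBalancer" if values.get("published", False) else "ClusterIP",
            "selector": {"app": name},
            "ports": [{"port": 80, "targetPort": port}],
        },
    }
    return [deployment, service]


manifests = render_manifests(
    {"name": "payment", "image": "payment:1.0.0", "cpu": "250m", "memory": "256Mi", "replicas": 2}
)
print([m["kind"] for m in manifests], manifests[1]["spec"]["type"])
# -> ['Deployment', 'Service'] ClusterIP
```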

With all that ready, engineers can simply write .yaml files in the repository: the memory & CPU each service needs, the number of replicas, and whether the service is published or only accessed internally, with one file per environment. At this point we were still using the staging branch strategy, and we now also had development and UAT environments. Anything pushed to a dev-* branch is deployed to the development env, the staging branch to the staging env, and the master branch to UAT. For production, we just need to build the release tag.
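The branch strategy above is essentially a lookup from the pushed Git ref to a target environment, combined with per-environment values that override shared defaults (Helm applies multiple values files in order, with later files taking precedence). A minimal Python sketch, assuming a full ref such as `refs/heads/staging` or a bare branch name, and treating any `refs/tags/...` ref as a production release; the ref handling and the values keys are our assumptions, not CircleCI configuration syntax.

```python
def environment_for(ref: str):
    """Map a pushed Git ref (or bare branch name) to its target environment, or None."""
    if ref.startswith("refs/tags/"):
        return "production"  # assumption: every tag is a release tag
    branch = ref[len("refs/heads/"):] if ref.startswith("refs/heads/") else ref
    if branch.startswith("dev-"):
        return "development"
    if branch == "staging":
        return "staging"
    if branch == "master":
        return "uat"
    return None  # other branches are not deployed


def merge_values(base: dict, override: dict) -> dict:
    """Deep-merge per-environment values over the defaults; the override wins."""
    merged = dict(base)
    for key, value in override.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = merge_values(merged[key], value)
        else:
            merged[key] = value
    return merged


# Hypothetical defaults and production overrides for one service.
defaults = {"replicas": 1, "resources": {"cpu": "100m", "memory": "128Mi"}, "published": False}
production = {"replicas": 3, "resources": {"cpu": "500m"}, "published": True}

print(environment_for("refs/heads/dev-payment"))  # development
print(merge_values(defaults, production))
# -> {'replicas': 3, 'resources': {'cpu': '500m', 'memory': '128Mi'}, 'published': True}
```

Nested maps are merged key by key, so an environment file only needs to list what differs from the defaults; lists and scalar values are replaced outright.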

Why not stay with AWS ECS?

As we said, we want to keep our DevOps team lean, and we found that ECS was not easy to implement. It was hard to automate, there was limited material to research, and it depends heavily on other AWS services that each need to be set up one by one. It just wasn’t what we expect from K8s. On GKE, by contrast, we can do all the setup within this one service.

We also experienced an outage because a container couldn’t send its logs to our log collector, and somehow it was hard and slow to bring it back up.

What’s Next?

Deployment is becoming more complex, and now we’re trying to implement our CI/CD with GitHub Actions. We’re still running a POC on some services, so the story isn’t complete yet. We’ll be back when it’s all done.